Duplicate content means that two or more pages on the same website have similar or identical content, which search engines then treat as duplicates.

If a website has too much duplicate content, search engines may treat it as manipulative, which can hurt the site's indexing and ranking.

This article explains what duplicate content is, how it affects SEO, and how to fix it.

What is duplicate content?

Duplicate content is content that is similar or identical to content on other sites or on other pages of the same site. A large amount of duplicate content on a site can have a negative impact on Google rankings.

In other words:

Duplicate content is content that matches, word for word, what appears on another page.

But “duplicate content” also covers content that is similar to other content, even if it has been slightly rewritten.

How does duplicate content affect SEO?

In general, Google does not want to rank pages with duplicate content.

In fact, Google states:

Google strives to index and display pages with distinct information.

Therefore, if the pages on your site do not have distinct information, it may hurt your search engine rankings.

Specifically, these are the three main problems that sites with a lot of duplicate content run into.

Less organic traffic: this one is simple. Google does not want to rank pages that use content copied from other pages in its index.

(That includes pages on your own website.)

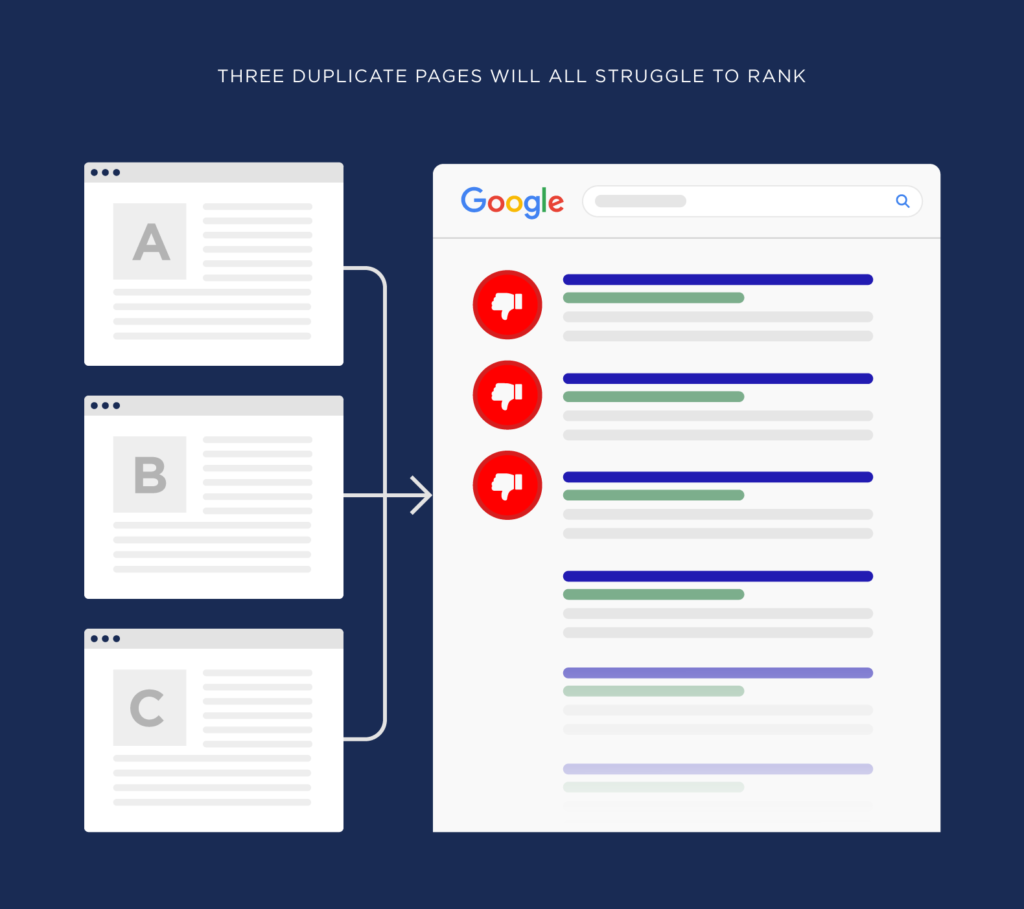

For example, suppose you have three pages with similar content on your site.

Google cannot be sure which page is the “original”. As a result, all three pages may struggle to rank.

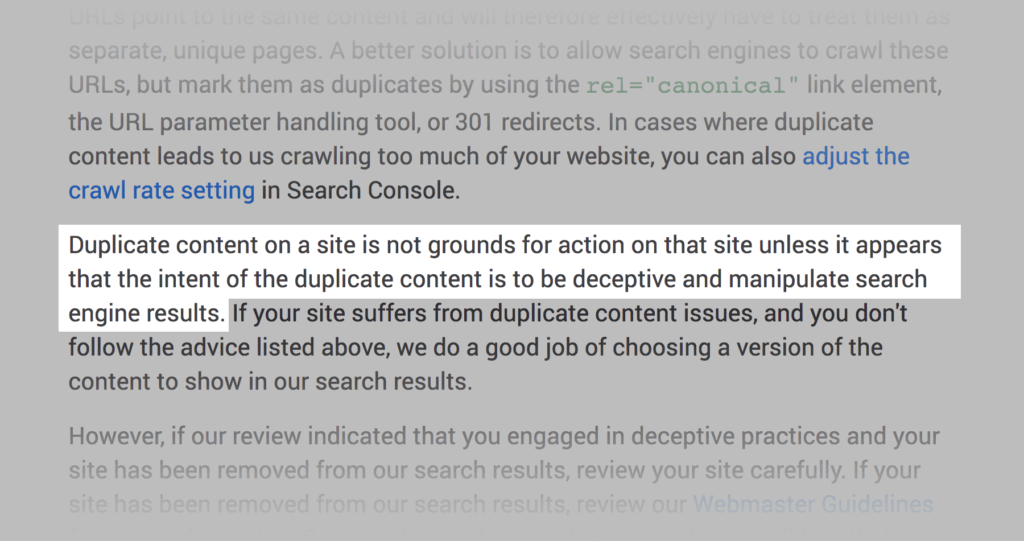

Penalty (extremely rare): Google has said that duplicate content can lead to a penalty or to the site being removed from the index entirely.

However, this is very rare, and it only happens when a site deliberately scrapes or copies content from other sites.

Therefore, even if you have a large number of duplicate pages on your site, you probably do not need to worry about a “duplicate content penalty”.

Fewer indexed pages: this is especially important for sites with a large number of pages, such as e-commerce sites.

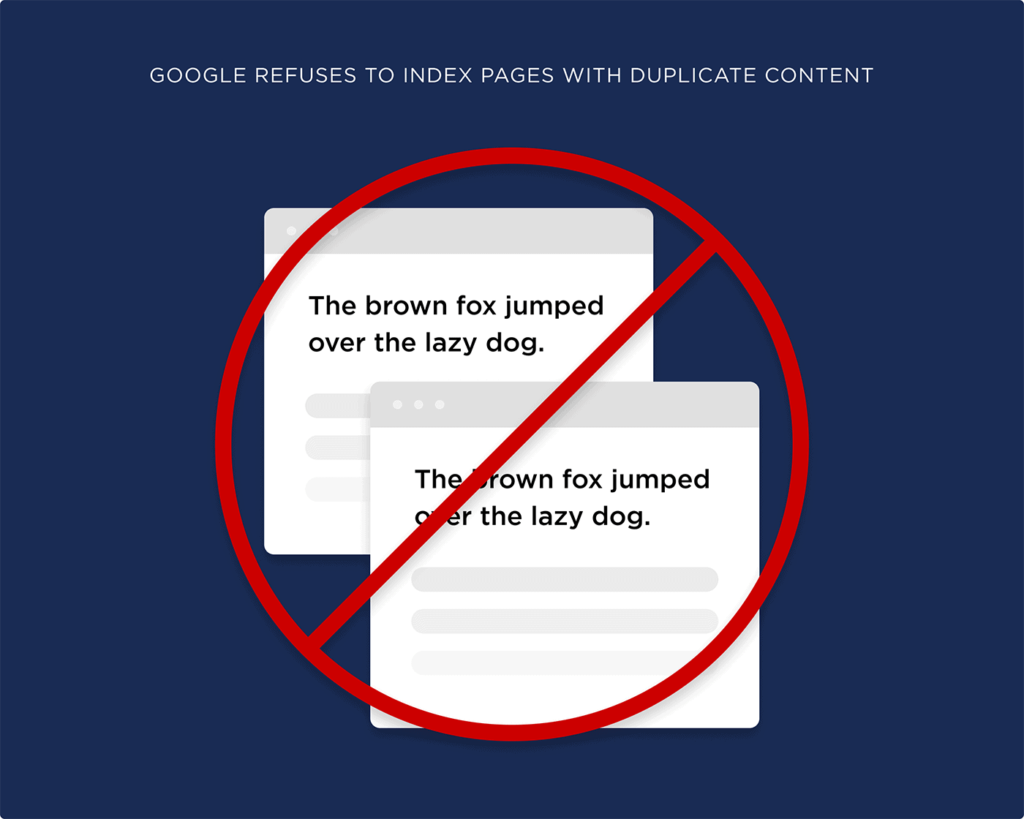

Sometimes Google doesn’t just lower the ranking of duplicate content. It refuses to index it at all.

Therefore, if some pages on your site are not being indexed, it may be because your crawl budget is being wasted on duplicate content.

Best practices

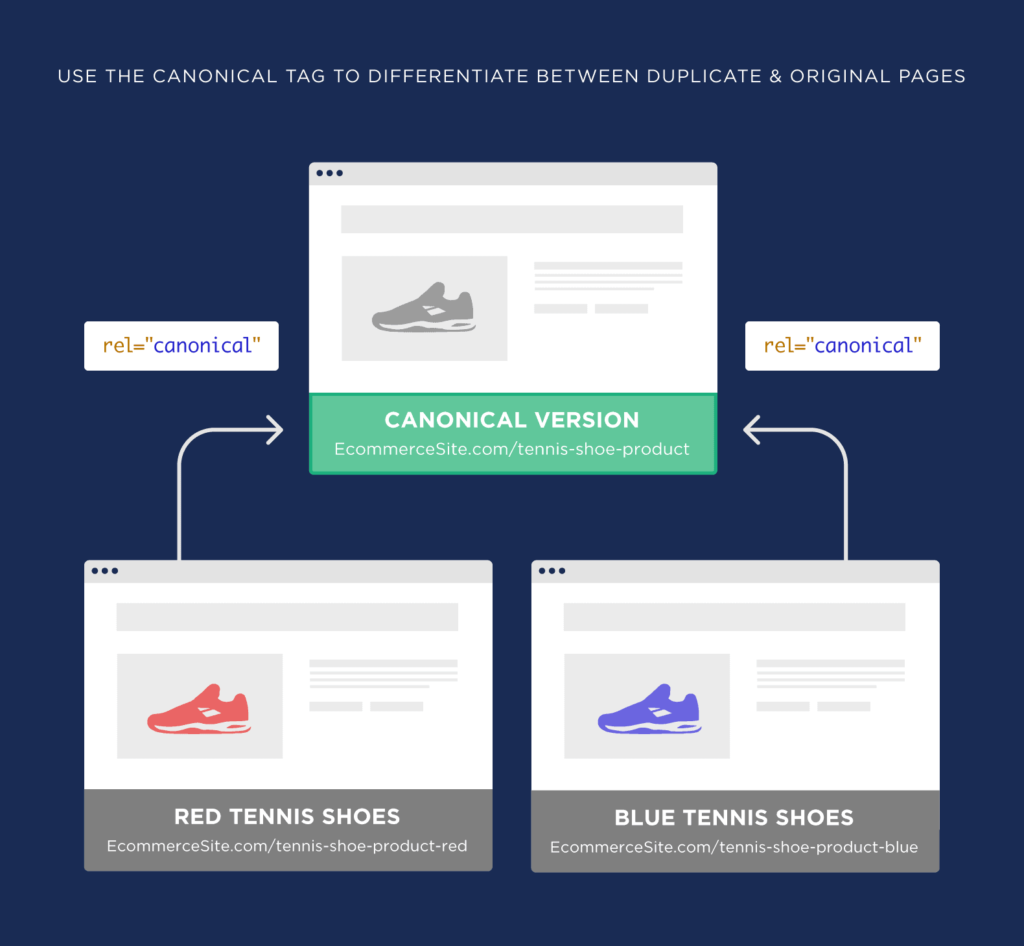

Watch for the same content on different URLs

This is the most common cause of duplicate content problems.

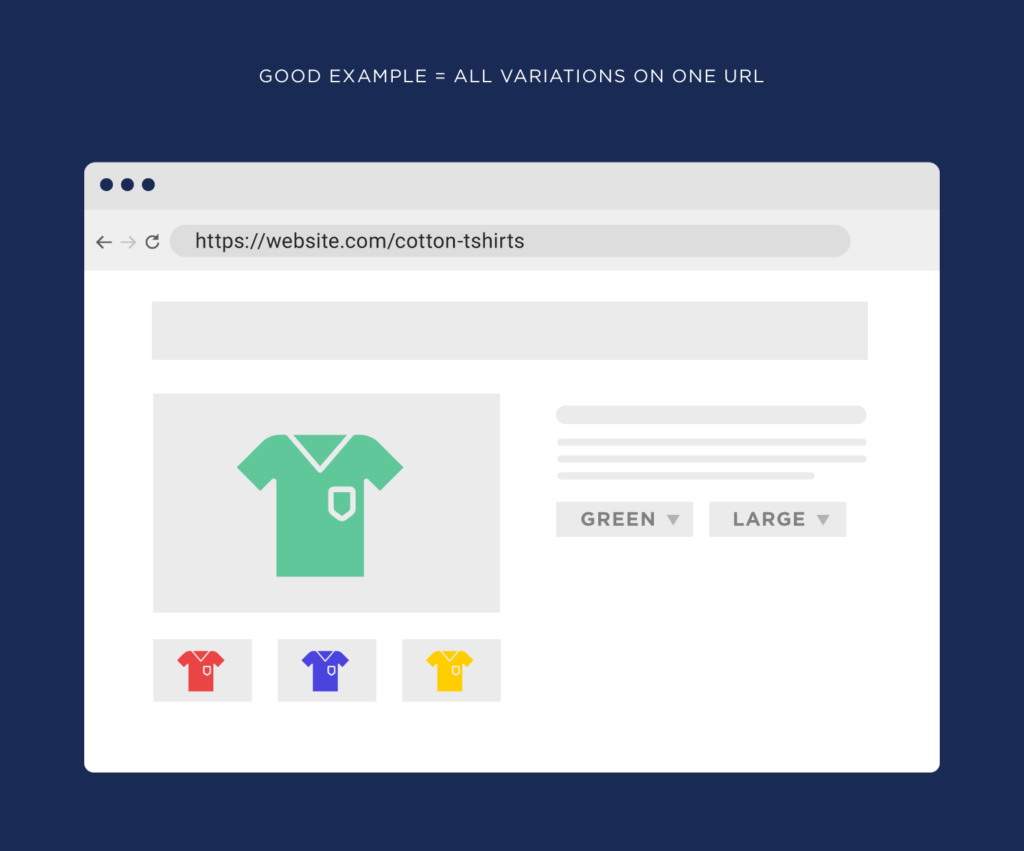

For example, suppose you run an e-commerce site.

You have a product page that sells T-shirts.

If everything is set up correctly, every size and color of that T-shirt lives on the same URL.

But sometimes you will find that your site creates a new URL for each variant of the product. This can result in thousands of duplicate content pages.

Another example:

If your site has a search function, its search results pages can also get indexed. Again, this can easily add more than 1,000 pages to your site, all of them containing duplicate content.
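One way to audit for both cases is to collapse URL variants to a single key and see which keys collect more than one crawled URL. The sketch below is only an illustration: the parameter names `size`, `color`, and `q` are hypothetical, so substitute whatever your platform actually appends.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameter names that only select a variant or a search query.
IGNORED_PARAMS = {"size", "color", "q"}


def normalize(url: str) -> str:
    """Drop ignored query parameters and the fragment, keep everything else."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in IGNORED_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def group_variants(urls):
    """Return normalized URLs that more than one crawled URL collapses to."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: members for key, members in groups.items() if len(members) > 1}
```

Feeding it a list of crawled URLs returns only the groups with two or more members, which are the candidates for a redirect or a canonical tag.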

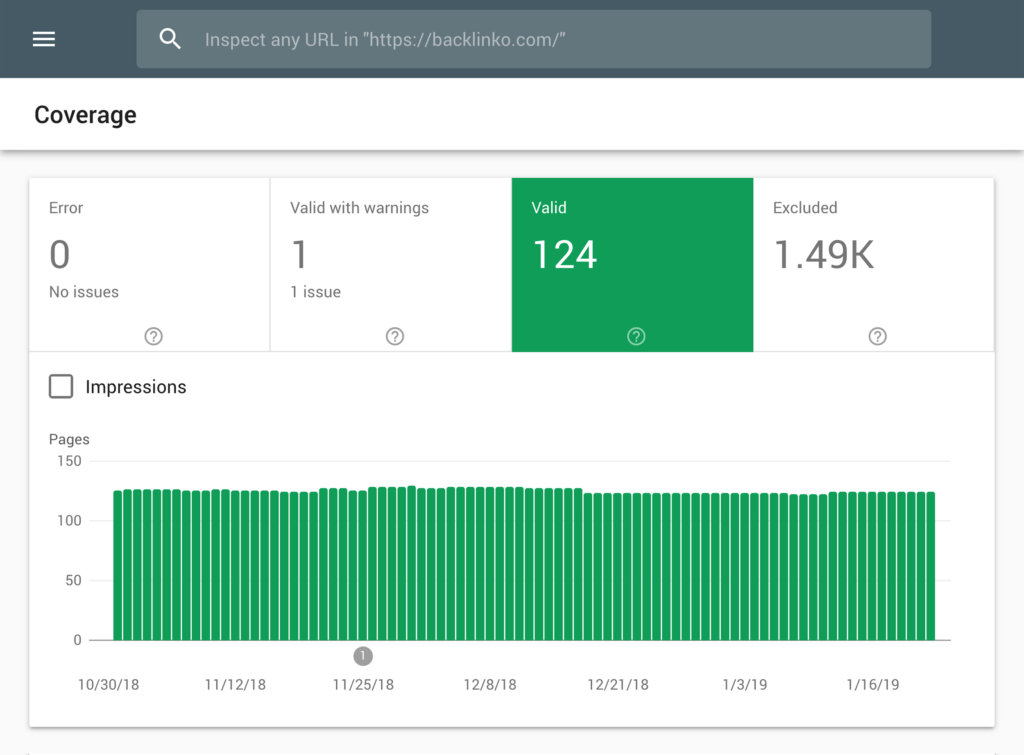

Check your indexed pages

One of the easiest ways to find duplicate content is to look at how many pages of your site Google has indexed.

You can do this by searching for site:example.com in Google.

Or view your indexed pages in Google Search Console.

Either way, this number should roughly match the number of pages you created manually.

If the number is far higher, say 16,000 or 160 million, you know that many pages are being generated automatically. Those pages probably contain a lot of duplicate content, though not necessarily.

Make sure your site redirects correctly

Sometimes you don't just have multiple versions of the same page. You have multiple versions of the same site.

It is rare, but I have seen it in the wild a number of times.

This problem occurs when the “WWW” version of your site does not redirect to the “non-WWW” version.

(Or vice versa.)

It can also happen if you switched your site to HTTPS and the HTTP version does not redirect.

In short: all different versions of your site should end in the same place.
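As a rough sketch of that check, the helper below lists the four scheme and host variants of a home page and reports any that do not end up at the canonical one. The function names are made up for illustration, the canonical URL is assumed to end in a slash, and how you collect each variant's final URL (a crawler, curl, a browser) is left to you.

```python
from urllib.parse import urlsplit


def site_variants(canonical: str):
    """Return the http/https and www/non-www variants of a canonical home URL."""
    host = urlsplit(canonical).netloc
    bare = host[4:] if host.startswith("www.") else host
    return [
        f"{scheme}://{prefix}{bare}/"
        for scheme in ("http", "https")
        for prefix in ("", "www.")
    ]


def misdirected(canonical: str, final_urls: dict):
    """List variants whose final URL, after redirects, is not the canonical one.

    final_urls maps each variant to the URL it ended up at.
    """
    return [v for v in site_variants(canonical) if final_urls.get(v) != canonical]
```

An empty result means every version of the site ends in the same place.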

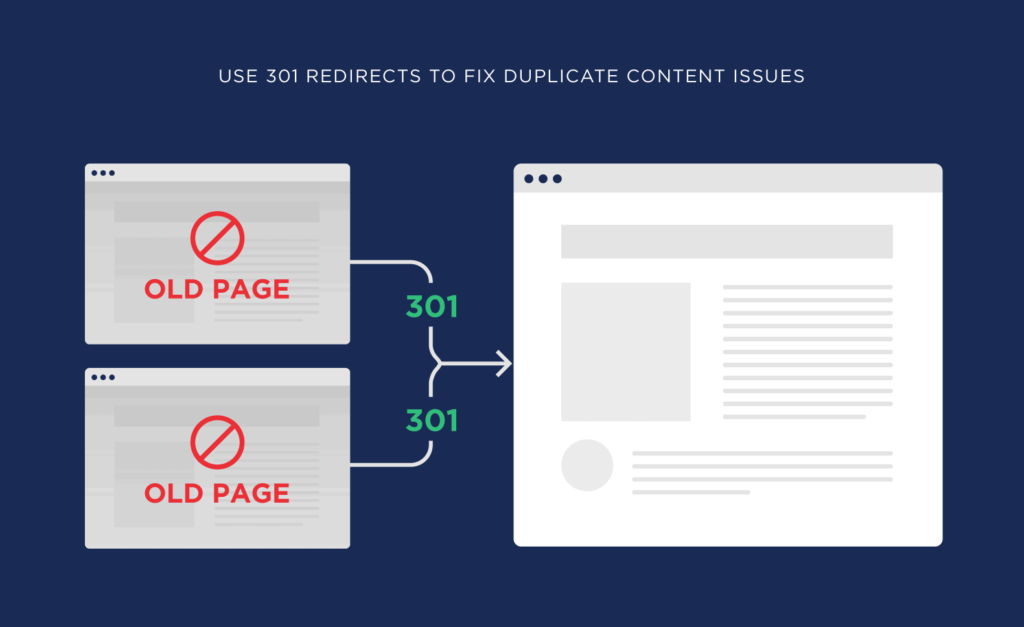

Use 301 redirects

A 301 redirect is the easiest way to fix duplicate content problems on your site.

(Other than deleting the pages entirely.)

So, if you find a bunch of duplicate content pages on your site, redirect them to the original page.

When Googlebot stops by, it will process the redirect and index only the original content.

(this can help the original page start ranking)
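To make the mechanics concrete, here is a minimal sketch of a WSGI app that answers known duplicate paths with a 301 pointing at the original. The paths in `REDIRECTS` are hypothetical, and in practice you would usually configure redirects in your web server or CMS rather than in application code.

```python
# Hypothetical mapping from duplicate paths to the original page.
REDIRECTS = {
    "/t-shirt-red": "/t-shirt",
    "/t-shirt-blue": "/t-shirt",
}


def app(environ, start_response):
    """Minimal WSGI app: 301 for known duplicates, 200 for everything else."""
    path = environ.get("PATH_INFO", "/")
    target = REDIRECTS.get(path)
    if target is not None:
        # A permanent redirect tells crawlers the content now lives at `target`.
        start_response("301 Moved Permanently", [("Location", target)])
        return [b""]
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"original page"]
```

For local experiments, the standard library's `wsgiref.simple_server.make_server` can serve this app.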

Pay attention to similar content

Duplicate content doesn’t just mean content copied verbatim from somewhere else.

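In fact, Google defines duplicate content as:

“Substantive blocks of content within or across domains that either completely match other content or are appreciably similar.”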

Therefore, even if your content is technically different from existing content, you may still encounter duplicate content problems.

For most websites, this is not a problem. Most sites have a few dozen pages, and each one gets its own unique copy.

However, in some cases, “similar” repetition may occur.

For example, suppose you run a website that teaches people how to speak French.

You serve the greater Boston area.

Well, you may have a service page optimized around the keyword “Learn French Boston”.

And another page trying to rank for “Learn French Cambridge”.

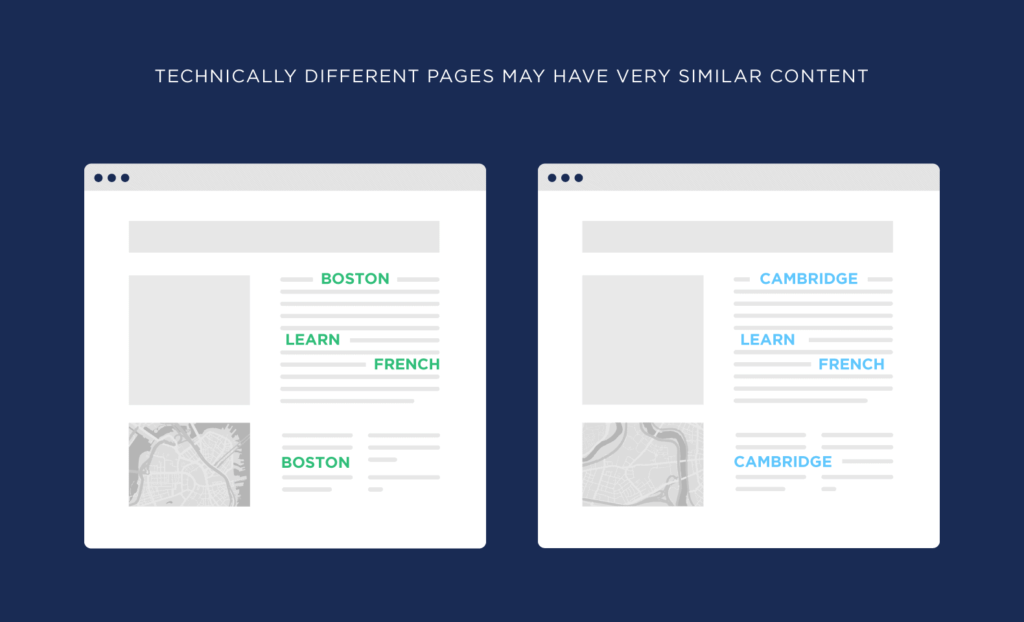

Sometimes the content is technically different. For example, one page lists the address of the Boston location, and the other lists the Cambridge address.

But for the most part, the content is very similar.

That still counts as duplicate content.

Is it a pain to write 100% unique content for every page on your site? Yes. But it is necessary if you are serious about ranking every page on the site.
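If you want a rough number for how similar two pages are, one simple technique is Jaccard similarity over word shingles. To be clear, this is not Google's method, just a common way to spot near-duplicates. The function names and the shingle size of 3 are choices made for this sketch.

```python
import re


def shingles(text: str, size: int = 3) -> set:
    """Return the set of overlapping word n-grams (shingles) in the text."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < size:
        # Too short to form a full shingle: treat the whole text as one.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def similarity(a: str, b: str, size: int = 3) -> float:
    """Jaccard similarity of the two texts' shingle sets, from 0.0 to 1.0."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)
```

Running `similarity` on the body text of the Boston and Cambridge pages gives a score between 0 and 1. Pairs that score close to 1 are the ones worth rewriting or merging.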

Use canonical tags

The rel=canonical tag tells search engines:

“Yes, we have a bunch of duplicate pages. But this page is the original. You can ignore the rest.”

Google says canonical tags are a better option than blocking pages with duplicate content.

(For example, blocking Googlebot in robots.txt or adding a noindex tag to the page's HTML.)

Therefore, if you find a bunch of pages on the site that contain duplicate content, you can:

- Delete them

- Redirect them

- Use canonical tags
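A canonical tag is a `<link rel="canonical" href="...">` element in the page's `<head>`. As a small sketch for auditing, the helper below uses Python's standard `html.parser` to report which canonical URL, if any, a page declares. The class and function names are invented for this example.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of the first <link rel="canonical"> in a document."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr = dict(attrs)
        # rel can hold several space-separated tokens, so split before checking.
        rel = (attr.get("rel") or "").lower().split()
        if "canonical" in rel:
            self.canonical = attr.get("href")


def find_canonical(html: str):
    """Return the canonical URL declared in the HTML, or None if absent."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical
```

Duplicate pages should all report the URL of the original, and the original should report its own URL.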

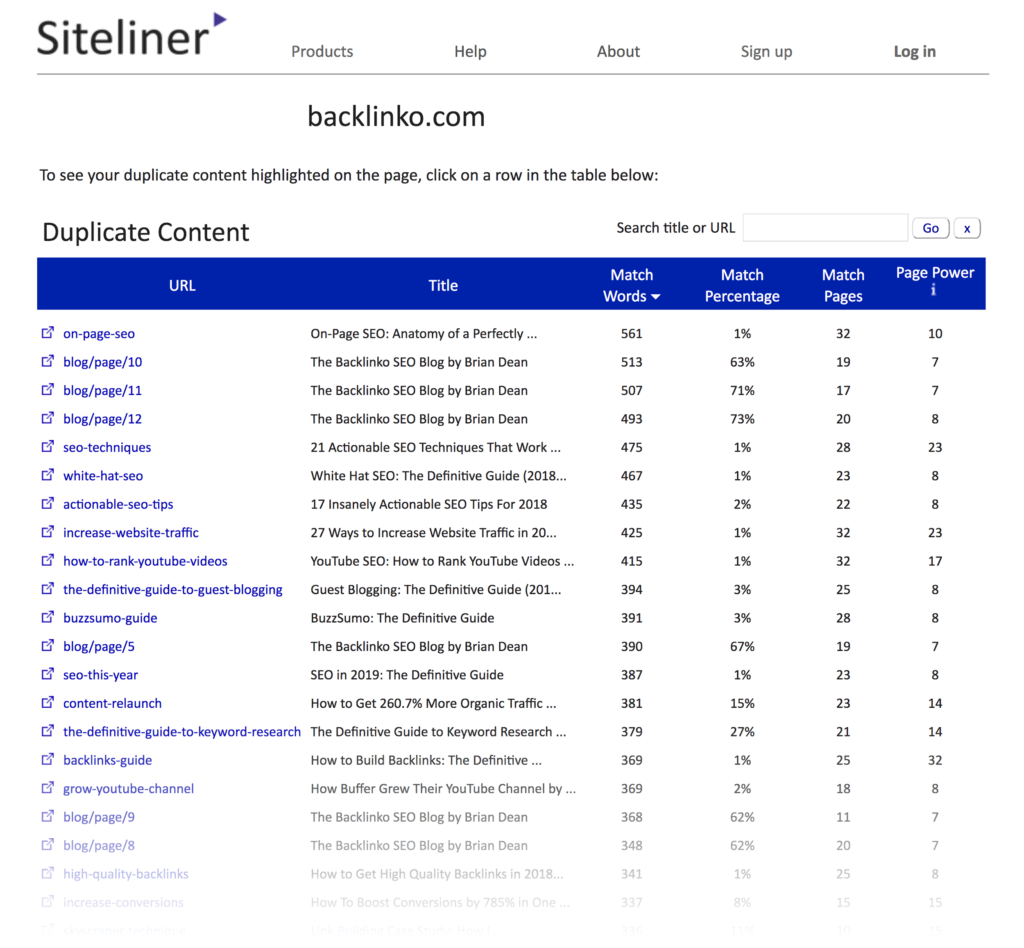

Use a tool

Some SEO tools have features designed to find duplicate content.

For example, Siteliner scans your website for pages that contain a lot of duplicate content.

Merge pages

As I mentioned, if you have many pages with straight-up duplicate content, you probably want to redirect them to one page.

(Or use a canonical tag.)

But what if you have pages with similar content?

Well, you can write unique content for each page. Or merge them into one big page.

For example, suppose you have three blog posts on your site that are technically different, but the content is almost the same.

You can combine those three posts into one great blog post that is 100% unique.

Because you have removed duplicate content from the site, that one page should rank better than the other three pages combined.

Noindex WordPress tag and category pages

If you use WordPress, you may have noticed that it automatically generates tag and category pages.

These pages are a huge source of duplicate content.

They are useful to users, so I suggest adding a “noindex” tag to them rather than removing them. That way they can exist without being indexed by search engines.

You can also configure WordPress so that these pages are not generated at all.

Learn more

How does Google handle duplicate content? A video from Google's Matt Cutts on how Google views duplicate content.

The myth of the duplicate content penalty: this article outlines why most people don’t need to worry about a “duplicate content penalty”.