Most website operators and webmasters need to update their sites constantly to keep content fresh and improve their SEO rankings.

However, some sites have hundreds or even thousands of pages, which makes it hard for teams that push updates to search engines manually. If content is updated this frequently, how can a team make sure these improvements actually affect its SEO rankings?

This is where web crawlers come in. A web crawler crawls your sitemap for new updates and indexes the content for the search engine.

In this article, we outline a comprehensive list of web crawlers, covering all the ones you need to know. Before we go any further, let’s define web crawlers and explain how they work.

- What is a web crawler?

- How does a web crawler work?

- What are the different types of web crawlers?

- The 11 most common web crawlers

- 8 commercial crawlers SEOers need to know

- Do I need to block and protect against malicious web crawlers?

What is a web crawler?

A web crawler, also known as a web spider, is a type of web robot that automatically browses the World Wide Web, generally to compile a web index. Web search engines and some other websites use crawler software to update their own content or their indexes of other sites’ content. Web crawlers can save the pages they visit so that search engines can index them for users to search later. Crawling consumes resources on the target systems, and many systems do not tacitly permit crawlers. (Source: Wikipedia)

Web crawlers, web spiders, or search engine bots download and index content from all over the Internet. A bot’s goal is to learn what (almost) every page on the web is about, so that the information can be retrieved when it’s needed. They are called “web crawlers” because crawling is the technical term for automatically accessing a website and obtaining data through a software program.

These bots are almost always operated by search engines. By applying a search algorithm to the data the crawlers collect, a search engine can return relevant links in response to a user’s query, generating the list of web pages shown after the user types a search into Google or Bing (or another search engine).

A crawler bot is like a person who goes through all the disorganized books in a library and compiles a card catalog, so that anyone visiting the library can find the information they need quickly and easily. To classify and sort the books by topic, the organizer reads the title, summary, and some of the internal text of each book to understand what it is about.

A web crawler is a computer program that automatically and systematically scans and reads web pages and indexes them for search engines. Web crawlers are also known as search spiders or robots. For a search engine to serve up-to-date, relevant web pages to the users who search, a web crawler bot must first crawl those pages. This process sometimes happens automatically (depending on the crawler and your site’s settings), or it can be started directly.

Many factors affect your pages’ SEO rankings, including relevance, backlinks, web hosting, and more. However, none of them matter if your pages aren’t crawled and indexed by search engines. That’s why it’s so important to make sure your site allows correct crawling and to remove any obstacles in crawlers’ way.

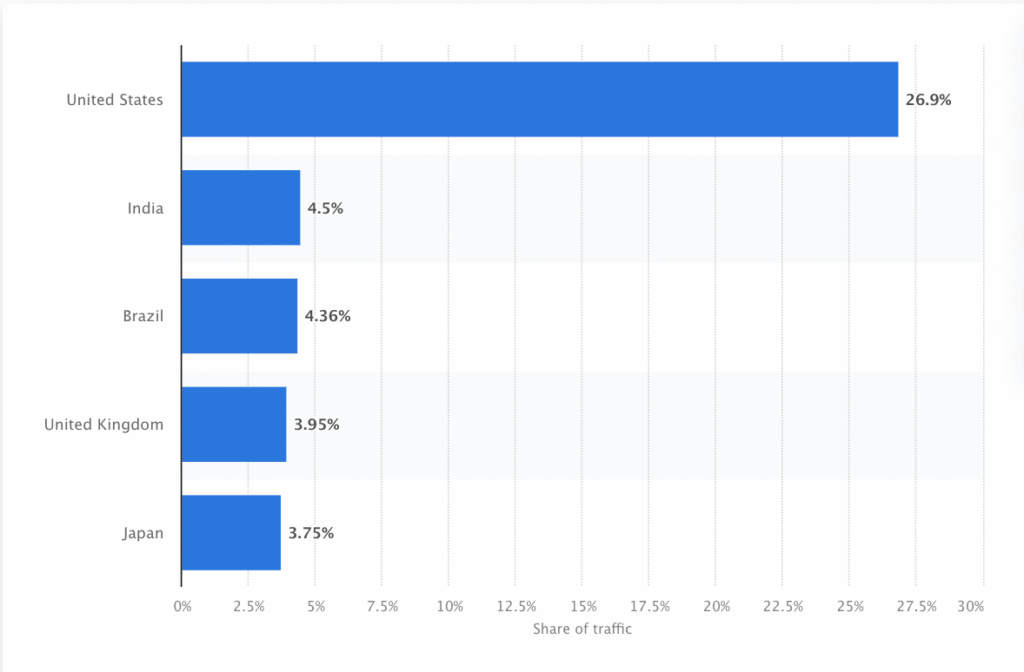

Web crawlers must constantly scan and crawl the web to ensure that the most accurate information is presented. Google is the most visited website in the United States, and about 26.9% of its searches come from American users.

The largest share of Google search users is in the United States (Source: Statista)

However, no single web crawler crawls for every search engine. Each search engine has its own strengths, so developers and marketers sometimes compile a “crawler list”. This list helps them identify the different crawlers in their site logs so they can accept or block them.

Website operators need to compile a list of the different web crawlers and learn how each one evaluates their site (as opposed to crawlers that steal content) to make sure they optimize their landing pages correctly for search engines.

How does a web crawler work?

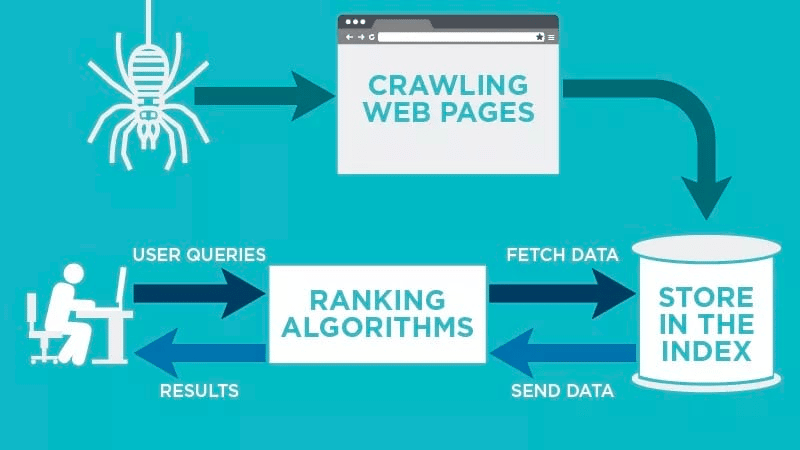

Web crawlers work by discovering URLs and then reviewing and categorizing web pages. Along the way, they find hyperlinks to other pages and add them to the list of pages to crawl next. Web crawlers are smart enough to judge the importance of each web page.

A search engine’s web crawler probably won’t crawl the entire Internet. Instead, it determines the importance of each page based on a variety of factors, including how many other pages link to it, its page views, and even brand authority. The crawler then decides which pages to crawl, in what order, and how often to recrawl them for updates.

The web crawler automatically scans and indexes your data after your page is published.
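The discover, queue, and visit loop described above can be sketched in a few lines of Python. This is a minimal illustration, not how any search engine actually schedules crawls: the `LINK_GRAPH` dictionary is a hypothetical stand-in for pages that would normally be fetched over HTTP, and pages are visited breadth-first while already-discovered URLs are skipped.

```python
from collections import deque

# Hypothetical site: each URL maps to the links found on that page.
LINK_GRAPH = {
    "https://example.com/": ["https://example.com/blog", "https://example.com/about"],
    "https://example.com/blog": ["https://example.com/blog/post-1", "https://example.com/"],
    "https://example.com/about": [],
    "https://example.com/blog/post-1": ["https://example.com/about"],
}


def crawl(seed: str, max_pages: int = 100) -> list[str]:
    """Breadth-first crawl: visit a page, queue its unseen links, repeat."""
    frontier = deque([seed])  # URLs waiting to be crawled
    seen = {seed}             # URLs already discovered, so loops are skipped
    visited = []
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        visited.append(url)
        # A real crawler would fetch the page and extract its links here.
        for link in LINK_GRAPH.get(url, []):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return visited


print(crawl("https://example.com/"))
# ['https://example.com/', 'https://example.com/blog', 'https://example.com/about', 'https://example.com/blog/post-1']
```

A production crawler would also order its frontier by page importance rather than strictly first-in, first-out.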

Crawlers look for specific keywords associated with each web page and index that information for Google, Bing, and other search engines.

Crawling a web page is a multi-step process (Source: Neil Patel)

For example, if you publish a new web page or change an existing one, the web crawler will notice and update the index. You can also ask the search engine to crawl a new page directly.

When a web crawler visits your page, it looks at the copy and meta tags, stores that information, and indexes it so Google can sort the page by keywords.
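What a crawler “looks at” on a page can be approximated with Python’s standard `html.parser`. This is a minimal sketch, not how Googlebot works: the `PageScanner` class and the sample HTML are hypothetical, and the class only collects the `<title>`, named `<meta>` tags, and visible text (skipping `<script>` and `<style>` contents).

```python
from html.parser import HTMLParser


class PageScanner(HTMLParser):
    """Hypothetical helper: collects <title>, named <meta> tags, and visible text."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta = {}
        self.text = []
        self._in_title = False
        self._skip = 0  # depth inside <script>/<style>, whose text is not page copy

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag in ("script", "style"):
            self._skip += 1
        elif tag == "meta" and attrs.get("name"):
            self.meta[attrs["name"].lower()] = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if self._in_title:
            self.title += data.strip()
        elif not self._skip and data.strip():
            self.text.append(data.strip())


html = """<html><head><title>Crawler Guide</title>
<meta name="description" content="A list of web crawlers.">
<meta name="robots" content="index, follow"></head>
<body><h1>Web crawlers</h1><script>var x = 1;</script>
<p>Googlebot and Bingbot.</p></body></html>"""

scanner = PageScanner()
scanner.feed(html)
scanner.close()
print(scanner.title)  # Crawler Guide
print(scanner.meta)   # {'description': 'A list of web crawlers.', 'robots': 'index, follow'}
print(scanner.text)   # ['Web crawlers', 'Googlebot and Bingbot.']
```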

Before the whole process begins, the web crawler checks your robots.txt file to see which pages it may crawl, which is why robots.txt is so important for technical SEO.
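Python’s standard library ships a parser for this file, so the check a compliant crawler performs is easy to sketch. The robots.txt content and URLs below are hypothetical. Note that a crawler with its own group (Googlebot here) follows only that group, so the `*` rules no longer apply to it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for example.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/

User-agent: Googlebot
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Googlebot matches its own group; any other crawler falls back to "*".
checks = [
    ("Googlebot", "https://example.com/blog/"),           # True
    ("Googlebot", "https://example.com/private/report"),  # False
    ("Bingbot", "https://example.com/wp-admin/"),         # False
    ("Bingbot", "https://example.com/private/report"),    # True
]
for agent, url in checks:
    print(agent, url, parser.can_fetch(agent, url))
```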

Ultimately, when a web crawler crawls your page, it determines whether your page will appear on the search results page for a query. Note that some web crawlers behave differently from others; for example, some weigh different factors when deciding which pages are most important.

When a user searches for related keywords, the search engine’s algorithm retrieves this data.

Crawling starts with known URLs. These are established web pages, and various signals lead web crawlers to them, such as:

- Backlinks: the number of times other sites link to the page

- Visitors: how much traffic the page receives

- Domain authority: the overall quality of the domain
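One way to picture how these signals decide what gets crawled first is a priority queue. The weights below are pure assumptions for illustration (search engines do not publish how they combine signals), and the signal values are hypothetical numbers normalized to the 0 to 1 range.

```python
import heapq

# Hypothetical weights; real search engines do not publish theirs.
WEIGHTS = {"backlinks": 0.5, "visitors": 0.3, "domain_authority": 0.2}


def priority(signals: dict[str, float]) -> float:
    """Combine normalized signals (0.0 to 1.0) into a single crawl priority."""
    return sum(WEIGHTS[name] * signals.get(name, 0.0) for name in WEIGHTS)


def crawl_order(pages: dict[str, dict[str, float]]) -> list[str]:
    """Return URLs from highest to lowest priority (negated scores make a max-heap)."""
    heap = [(-priority(signals), url) for url, signals in pages.items()]
    heapq.heapify(heap)
    return [heapq.heappop(heap)[1] for _ in range(len(heap))]


pages = {
    "https://example.com/old-page": {"backlinks": 0.1, "visitors": 0.05, "domain_authority": 0.7},
    "https://example.com/": {"backlinks": 0.9, "visitors": 0.8, "domain_authority": 0.7},
    "https://example.com/popular-post": {"backlinks": 0.6, "visitors": 0.9, "domain_authority": 0.7},
}
print(crawl_order(pages))
# ['https://example.com/', 'https://example.com/popular-post', 'https://example.com/old-page']
```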

Crawlers then store the data in the search engine’s index. When a user runs a search query, the algorithm pulls the data from the index and displays it on the search engine results page. This can happen in milliseconds, which is why results usually appear so quickly.
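Lookups are fast because the index is built ahead of time. A toy inverted index, which maps each word to the pages that contain it, shows the idea; real search engines also rank the matches, which this sketch does not attempt. The page texts are hypothetical.

```python
import re
from collections import defaultdict


def build_index(pages: dict[str, str]) -> dict[str, set[str]]:
    """Map each lowercase word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in re.findall(r"[a-z0-9]+", text.lower()):
            index[word].add(url)
    return index


def search(index: dict[str, set[str]], query: str) -> set[str]:
    """Return the URLs that contain every word in the query (no ranking)."""
    words = re.findall(r"[a-z0-9]+", query.lower())
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


pages = {
    "https://example.com/googlebot": "Googlebot is Google's web crawler.",
    "https://example.com/bingbot": "Bingbot is Microsoft's web crawler for Bing.",
}
index = build_index(pages)
print(sorted(search(index, "web crawler")))
# ['https://example.com/bingbot', 'https://example.com/googlebot']
print(search(index, "Bing crawler"))
# {'https://example.com/bingbot'}
```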

As a webmaster, you can control which bots crawl your website, which is why it’s important to keep a list of crawlers. You do this with the robots.txt protocol: a robots.txt file at the root of your site tells crawlers which content they may crawl and can point them to new content through your sitemap.

Based on the rules you enter in robots.txt, you can tell crawlers to crawl a page or to stay away from it in the future. (robots.txt controls crawling, not indexing; to keep a crawlable page out of search results, use a noindex meta tag.) By understanding what web crawlers look for in their scans, you can learn how to better position your content for search engines.
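If you keep your allow/block decisions in a simple table, you can generate the file from it. The helper below is hypothetical (`build_robots_txt` is not part of any library): it writes one group per user agent plus an optional `Sitemap` line, and the result is then checked with Python’s `urllib.robotparser`. The policy shown, blocking `Baiduspider` entirely, is only an example.

```python
from typing import Optional
from urllib.robotparser import RobotFileParser


def build_robots_txt(rules: dict[str, list[str]], sitemap: Optional[str] = None) -> str:
    """Render one robots.txt group per user agent from {agent: [disallowed paths]}.

    An empty path list becomes an empty "Disallow:" rule, which blocks nothing.
    """
    lines = []
    for agent, paths in rules.items():
        lines.append(f"User-agent: {agent}")
        if paths:
            lines.extend(f"Disallow: {path}" for path in paths)
        else:
            lines.append("Disallow:")
        lines.append("")  # a blank line separates groups
    if sitemap:
        lines.append(f"Sitemap: {sitemap}")
    return "\n".join(lines) + "\n"


robots = build_robots_txt(
    {"*": ["/wp-admin/"], "Baiduspider": ["/"]},  # hypothetical policy
    sitemap="https://example.com/sitemap.xml",
)
print(robots)

# Read the generated file the way a compliant crawler would.
parser = RobotFileParser()
parser.parse(robots.splitlines())
print(parser.can_fetch("Baiduspider", "https://example.com/blog/"))  # False
print(parser.can_fetch("Googlebot", "https://example.com/blog/"))    # True
```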

What are the different types of web crawlers?

There are many tools with different functions to choose from on the market, but they all fall into two categories:

- Desktop Crawler: these tools are installed and stored on your computer.

- Cloud Crawler: these tools use cloud computing and do not need to be stored locally on your computer.

The type of tool you use will depend on your team’s needs and budget. In general, a cloud-based option allows more collaboration because the program does not need to be stored on a personal device. Once a crawler is set up, you can schedule it to run at specific intervals and generate reports as needed.

In addition, as you start compiling your list of crawlers, you can classify web crawlers by their commercial model:

- Internal crawlers: these are crawlers designed by a company’s development team to scan its own websites. They are typically used for site auditing and optimization.

- Commercial crawlers: these are ready-built crawlers, such as Screaming Frog, that companies can use to crawl and efficiently evaluate their content.

- Open source crawlers: these are free-to-use crawlers built by developers and hackers around the world.

It is important to understand the different types of crawlers available so that you know which type you need to achieve your business goals.

The 11 most common web crawlers

No single crawler does all the work for every search engine.

Instead, a variety of web crawlers evaluate your web pages and scan their content for the search engines that people around the world use.

Let’s look at some of the most common web crawlers today (strictly speaking, we should call them search engine crawlers).

1. Googlebot

Googlebot is Google’s general-purpose web crawler that crawls sites that will be displayed on Google’s search engine.

Googlebot is the web crawler software Google uses to build the searchable web index for Google Search. Googlebot includes two different types of web crawlers: Googlebot Desktop and Googlebot Mobile.

Googlebot indexes the site to provide the latest Google results

Googlebot Desktop and Googlebot Mobile both obey the same product token (called the user agent token) written in each site’s robots.txt. The user agent token for Googlebot is simply “Googlebot”.

Googlebot gets to work and typically accesses your site no more than once every few seconds on average, unless you block it in your robots.txt. Copies of the scanned pages are saved in a unified database called Google Cache, which lets you view older versions of your website. In addition, Google Search Console is another tool webmasters use to understand how Googlebot crawls their sites and to optimize their pages.

2. Bingbot

Bingbot was created by Microsoft in 2010 to scan and index URLs so that Bing provides relevant, up-to-date search results for the platform’s users.

Bingbot is a web-crawling robot (a type of internet bot) deployed by Microsoft to power Bing. It collects documents from the web to build a searchable index for Bing. It replaced msnbot as Bing’s main crawler in October 2010.

Bingbot provides relevant search engine results for Bing

As with Googlebot, developers or marketers can specify in their site’s robots.txt whether to allow or deny the agent identifier “bingbot” to scan their site.

In addition, they can distinguish between mobile-first indexing crawlers and desktop crawlers, since Bingbot recently switched to a new agent type. This, along with Bing Webmaster Tools, gives webmasters more flexibility in how their sites are discovered and displayed in search results.

3. Yandex Bot

Yandex Bot is the crawler built specifically for the Russian search engine Yandex, one of the largest and most popular search engines in Russia.

Yandex Bot indexes sites for the Russian search engine Yandex

Webmasters can use robots.txt to let Yandex Bot access their pages. In addition, they can add a Yandex.Metrica tag to specific pages, request reindexing of pages in Yandex.Webmaster, or use the IndexNow protocol, which lets sites report new, modified, or deleted pages.
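IndexNow submissions are plain HTTP requests. The sketch below builds a POST with the protocol’s JSON fields (`host`, `key`, `keyLocation`, `urlList`) using only the standard library. The host, key, and URLs are placeholders, the endpoint should be confirmed against the IndexNow documentation before use, and the request is deliberately not sent so the example runs offline.

```python
import json
from urllib.request import Request

# Assumed endpoint; confirm it against the IndexNow documentation before use.
INDEXNOW_ENDPOINT = "https://api.indexnow.org/IndexNow"


def build_indexnow_request(host: str, key: str, urls: list[str]) -> Request:
    """Build, but do not send, an IndexNow POST reporting new or changed URLs."""
    payload = {
        "host": host,
        "key": key,  # your verification key, also hosted as a text file on your site
        "keyLocation": f"https://{host}/{key}.txt",
        "urlList": urls,
    }
    return Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


req = build_indexnow_request(
    "www.example.com",
    "hypothetical-key-123",  # placeholder key
    ["https://www.example.com/new-page", "https://www.example.com/updated-page"],
)
print(req.get_method(), req.full_url)
# To actually submit it: urllib.request.urlopen(req)
```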

4. Apple Bot

Apple uses Apple Bot to crawl and index web pages for Apple’s Siri and Spotlight recommendations.

Apple Bot is the web crawler for Apple’s Siri and Spotlight

Apple Bot considers a number of factors when deciding which content to promote in Siri and Spotlight recommendations. These factors include user engagement, the relevance of search terms, the number and quality of links, location-based signals, and even web page design.

5. DuckDuck Bot

DuckDuckBot is the web crawler for DuckDuckGo, which offers “seamless privacy protection on web browsers”.

DuckDuckBot crawls for the privacy-focused search engine DuckDuckGo

Webmasters can use the DuckDuckBot API to check whether DuckDuckBot has crawled their sites. As it crawls, it updates the DuckDuckBot API database with its most recent IP addresses and user agents.

This helps webmasters identify any impostors or malicious bots trying to pass themselves off as DuckDuckBot.
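Checking a log entry against a crawler’s published IP addresses takes only the standard `ipaddress` module. The ranges below are hypothetical (they are reserved documentation networks); in practice you would load the list the crawler operator publishes, such as the DuckDuckBot IP addresses mentioned above.

```python
import ipaddress

# Hypothetical "published" crawler ranges (reserved documentation networks).
# Replace them with the addresses the crawler operator actually publishes.
PUBLISHED_RANGES = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.16/28"),
]


def is_published_crawler_ip(ip: str) -> bool:
    """True if an IP from your logs falls inside one of the published ranges."""
    address = ipaddress.ip_address(ip)
    return any(address in network for network in PUBLISHED_RANGES)


# Hypothetical log entries that all claim to be DuckDuckBot in their User-Agent.
for ip in ["192.0.2.45", "198.51.100.20", "203.0.113.9"]:
    verdict = "listed" if is_published_crawler_ip(ip) else "possible impostor"
    print(ip, verdict)
```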

6. Baidu Spider

Baidu is the leading search engine in China, and Baidu Spider is its sole crawler.

Baidu Spider is the crawler for Baidu, a Chinese search engine

Google is blocked in China, so if you want to enter the Chinese market, it is important to let Baidu Spider crawl your website. To identify Baidu Spider on your site, look for the following user agents: baiduspider, baiduspider-image, baiduspider-video, and others.
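A first pass over your server logs can tag visits by looking for these user agent tokens. This is a minimal sketch with hypothetical sample strings. Because user agents are easy to fake, a match only tells you what a visitor claims to be. Longer tokens such as `baiduspider-image` are checked before `baiduspider` so the more specific name wins.

```python
from typing import Optional

# Lowercase User-Agent tokens to look for. More specific tokens come first,
# because dicts keep insertion order and the first match wins.
CRAWLER_TOKENS = {
    "baiduspider-image": "Baidu Spider (images)",
    "baiduspider-video": "Baidu Spider (video)",
    "baiduspider": "Baidu Spider",
    "googlebot": "Googlebot",
    "bingbot": "Bingbot",
}


def identify_crawler(user_agent: str) -> Optional[str]:
    """Return the crawler a User-Agent string claims to be, or None."""
    ua = user_agent.lower()
    for token, name in CRAWLER_TOKENS.items():
        if token in ua:
            return name
    return None


# Hypothetical sample User-Agent strings, as they might appear in an access log.
samples = [
    "Mozilla/5.0 (compatible; Baiduspider/2.0; +http://www.baidu.com/search/spider.html)",
    "Baiduspider-image",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
]
for ua in samples:
    print(identify_crawler(ua))
# Baidu Spider
# Baidu Spider (images)
# None
```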

If you don’t do business in China, it may make sense to block Baidu Spider in your robots.txt file. This prevents Baidu Spider from crawling your site, eliminating any chance of your pages appearing on Baidu’s search engine results pages (SERPs).

7. Sogou Spider

Sogou is a Chinese search engine and is said to be the first search engine with an index of 10 billion Chinese web pages.

Sogou Spider is one of Sogou’s crawlers

If you do business in the Chinese market, this is another popular search engine crawler you need to know. Sogou Spider follows the robots exclusion text and crawl-delay parameters.

As with Baidu Spider, if you don’t do business in the Chinese market, you should disable this spider to keep it from slowing down your site.
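The crawl-delay parameter is a `Crawl-delay` line in robots.txt, and a well-behaved crawler spaces its requests accordingly. The sketch below reads the value with Python’s `urllib.robotparser` and computes when each request would be sent. The robots.txt content and the `Sogou web spider` agent name are assumptions for illustration, and `request_schedule` is a hypothetical helper.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt asking Sogou's spider to wait 10 seconds between requests.
ROBOTS_TXT = """\
User-agent: Sogou web spider
Crawl-delay: 10

User-agent: *
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def request_schedule(urls, delay):
    """Seconds after the start at which a polite crawler would request each URL."""
    step = delay or 0  # no Crawl-delay means no enforced gap
    return [(i * step, url) for i, url in enumerate(urls)]


delay = parser.crawl_delay("Sogou web spider")
print(delay)  # 10
print(request_schedule(["https://example.com/a", "https://example.com/b"], delay))
# [(0, 'https://example.com/a'), (10, 'https://example.com/b')]
```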

8. Facebook External Hit

Facebook External Hit, also known as Facebook Crawler, crawls the HTML of an application or website shared on Facebook.

Facebook External Hit indexes websites for link sharing

This enables the social platform to generate a shareable preview of each link posted on it. The title, description, and thumbnail image in the preview are all made possible by the crawler.

If the crawl isn’t completed within a few seconds, Facebook won’t show the content in the custom snippet generated before sharing.
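Link previews like these are typically built from Open Graph `og:` meta tags in the shared page’s HTML. The sketch below extracts `og:title`, `og:description`, and `og:image` with Python’s `html.parser`. The fallback to the `<title>` element is an assumption of this example, not documented Facebook behavior, and the sample HTML is hypothetical.

```python
from html.parser import HTMLParser


class OpenGraphParser(HTMLParser):
    """Collects <meta property="og:..."> tags and the <title> text."""

    def __init__(self):
        super().__init__()
        self.og = {}
        self.title = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        prop = attrs.get("property") or ""
        if tag == "meta" and prop.startswith("og:"):
            self.og[prop[3:]] = attrs.get("content") or ""  # "og:title" -> "title"
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def link_preview(html: str) -> dict[str, str]:
    """Build a share preview; falling back to <title> is this sketch's assumption."""
    parser = OpenGraphParser()
    parser.feed(html)
    parser.close()
    return {
        "title": parser.og.get("title") or parser.title.strip(),
        "description": parser.og.get("description", ""),
        "image": parser.og.get("image", ""),
    }


html = """<html><head><title>Crawler list</title>
<meta property="og:description" content="Eight commercial crawlers.">
<meta property="og:image" content="https://example.com/thumb.png">
</head><body></body></html>"""
print(link_preview(html))
```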

9. Exabot

Exalead is a search engine company founded in France in 2000. Its search tools include voice search, language monitoring and location search, and data clustering.

Exabot is the crawler for Exalead, a search platform company

Exabot is the crawler for Exalead’s core search engine, which is built on its CloudView product.

Like most search engines, Exalead considers both backlinks and on-page content when ranking. Exabot is the user agent of Exalead’s robot. The robot creates a “master index” that compiles the results search engine users will see.

10. Swiftbot

Swiftype is a search engine customized for your website. It combines “the best search techniques, algorithms, content intake frameworks, clients and analysis tools”.

Swiftype is software that can power your site search

If you have a complex website with many pages, Swiftype provides a useful interface for cataloging and indexing all of them.

Swiftbot is Swiftype’s web crawler. Unlike other bots, however, Swiftbot only crawls the sites its customers request.

11. Slurp Bot

Slurp Bot is Yahoo’s search robot that crawls and indexes web pages for Yahoo.

Slurp Bot powers Yahoo’s search engine results

This crawling is essential for Yahoo.com and its partner sites, including Yahoo News, Yahoo Finance, and Yahoo Sports. Without it, relevant site listings would not appear. The indexed content helps give users a more personalized web experience and more relevant results.

8 commercial crawlers SEOers need to know

Now that you have the 11 most popular bots on your crawler list, let’s look at some common commercial crawlers and professional SEO tools.

1. Ahrefs Bot

Ahrefs Bot is a web crawler that compiles and indexes the 12-trillion-link database offered by the popular SEO software Ahrefs.

Ahrefs Bot indexes sites for the SEO platform Ahrefs

Ahrefs Bot visits 6 billion websites a day, and is considered to be the “second largest active crawler” after Googlebot.

Like other bots, Ahrefs Bot follows robots.txt, including the allow and disallow rules each website sets.

2. Semrush Bot

Semrush Bot enables Semrush, the leading search engine software, to collect and index website data for use by its customers on its platform.

Semrush Bot is the crawler Semrush uses to index websites

The data it collects is used in Semrush’s public backlink search engine, site audit tool, backlink audit tool, link building tool, and writing assistant.

It crawls your site by compiling a list of page URLs, visiting them, and saving certain hyperlinks for future visits.

3. Moz crawler Rogerbot

Rogerbot is the crawler for the leading SEO site Moz. It specifically collects content for Moz Pro Campaign site audits.

Moz is a popular SEO software that deploys Rogerbot as its crawler

Rogerbot follows all the rules set out in the robots.txt file, so you can decide whether or not to block / allow Rogerbot to scan your site.

Because of Rogerbot’s multifaceted approach, webmasters cannot look up a static IP address to see which pages Rogerbot has crawled.

4. Screaming Frog

Screaming Frog is a crawler that SEO professionals use to audit their own sites and identify areas for improvement that will affect their search engine rankings.

Screaming Frog is a crawler that helps improve SEO

Once you start a crawl, you can review real-time data and identify broken links or areas to improve, such as page titles, metadata, robots directives, duplicate content, and so on.

To configure crawl parameters, you must purchase a Screaming Frog license.
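Two of the checks mentioned above, broken links and duplicate page titles, are easy to sketch over crawl results. This is not Screaming Frog’s implementation: `CRAWL_RESULTS` is hypothetical data standing in for the status codes and titles a crawler would have collected.

```python
from collections import defaultdict

# Hypothetical crawl results: URL -> (HTTP status code, page title).
CRAWL_RESULTS = {
    "https://example.com/": (200, "Home"),
    "https://example.com/blog": (200, "Blog"),
    "https://example.com/blog?page=2": (200, "Blog"),
    "https://example.com/old-link": (404, ""),
}


def audit(results):
    """Return (broken URLs, {title: [URLs sharing that title]})."""
    broken = sorted(url for url, (status, _) in results.items() if status >= 400)
    by_title = defaultdict(list)
    for url, (status, title) in results.items():
        if status == 200 and title:
            by_title[title].append(url)
    duplicates = {title: urls for title, urls in by_title.items() if len(urls) > 1}
    return broken, duplicates


broken, duplicates = audit(CRAWL_RESULTS)
print(broken)      # ['https://example.com/old-link']
print(duplicates)  # {'Blog': ['https://example.com/blog', 'https://example.com/blog?page=2']}
```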

5. Lumar (formerly Deep Crawl)

Lumar is a “centralized command center for maintaining the technical health of your website”. Through this platform, you can launch a crawl of your website to help plan its architecture.

Deep Crawl, now renamed Lumar, is a website intelligence crawler

Lumar prides itself on being the “fastest web crawler on the market” and boasts that it can crawl 450 URLs per second.

6. Majestic

Majestic focuses primarily on tracking and identifying URL backlinks.

The Majestic crawler lets SEOs dig into backlink data

The company prides itself on having “one of the most comprehensive sources of backlink data on the Internet”, noting that in 2021 its historical index expanded from 5 to 15 years of links.

The site’s crawler makes all of this data available to the company’s customers.

7. cognitiveSEO

cognitiveSEO is another important SEO software that many professionals use.

cognitiveSEO provides a powerful crawler that lets users perform comprehensive site audits, which inform their site architecture and overall SEO strategy.

The bot crawls every page and provides “fully customized data sets” unique to the end user. The data also gives users recommendations on how to improve the site for other crawlers, both to influence rankings and to block unnecessary crawlers.

8. Oncrawl

Oncrawl is the “industry leading SEO crawler and log analyzer” for enterprise customers.

Oncrawl is another SEO crawler software that provides unique data.

Users can set up “crawl profiles” to create specific parameters for a crawl. You can save these settings (including the starting URL, crawl limit, maximum crawl speed, and so on) to easily rerun the crawl with the same parameters.
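A crawl profile is essentially a named bundle of settings that can be saved and reloaded. This is a hypothetical sketch, not Oncrawl’s format or API: the `CrawlProfile` fields mirror the settings listed above (starting URL, crawl limit, maximum crawl speed) and are stored as JSON.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class CrawlProfile:
    """Reusable crawl settings; field names are illustrative, not Oncrawl's."""
    name: str
    start_url: str
    max_pages: int = 10_000                # crawl limit
    max_requests_per_second: float = 5.0   # maximum crawl speed

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)

    @classmethod
    def from_json(cls, text: str) -> "CrawlProfile":
        return cls(**json.loads(text))


profile = CrawlProfile("weekly-audit", "https://example.com/", max_pages=5000)
saved = profile.to_json()                  # store this string in a file or database
restored = CrawlProfile.from_json(saved)   # later: rerun with identical settings
print(restored == profile)  # True
```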

Do I need to block and protect against malicious web crawlers?

Not all crawlers are good. Some may slow down your pages, while others may try to hack into your site or have other malicious intent.

That’s why it’s important to know how to block crawlers from accessing your site.

By building a list of crawlers, you’ll know which ones are the good ones to watch for. You can then weed out suspicious crawlers and add them to your block list (on WordPress, this can be done with the Spider Analyser plugin).

How to block malicious web crawlers

With your crawler list, you can decide which bots to allow and which to block. The first step is to go through your list of crawlers and record the user agent and full agent string associated with each crawler, as well as its specific IP addresses. These are the key identifying factors for each bot.

With each bot’s user agent and IP address, you can match it against your site records through a DNS lookup or IP matching (a spider lookup tool lets you enter a crawler’s IP address and quickly check whether it comes from a genuine spider or crawler). If they don’t match exactly, you may have a malicious bot trying to impersonate a real one.
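The DNS check can be sketched as forward-confirmed reverse DNS: look up the hostname for the visiting IP, confirm it belongs to the crawler’s domain, then resolve that hostname and confirm it maps back to the same IP. Google documents this method for Googlebot (hostnames ending in googlebot.com or google.com) and Bing for Bingbot (search.msn.com); beyond those two entries, the suffix table is an assumption to extend from each operator’s documentation. The lookup functions are parameters so the example can be exercised offline with stand-in resolvers.

```python
import socket

# Hostname suffixes a genuine crawler's reverse DNS record should end with.
# Extend this from each crawler operator's own documentation.
VERIFIED_SUFFIXES = {
    "googlebot": (".googlebot.com", ".google.com"),
    "bingbot": (".search.msn.com",),
}


def verify_crawler_ip(ip, crawler, reverse_lookup=socket.gethostbyaddr,
                      forward_lookup=socket.gethostbyname_ex):
    """Forward-confirmed reverse DNS: IP -> hostname -> IP must round-trip."""
    try:
        hostname = reverse_lookup(ip)[0]        # PTR lookup
    except OSError:                              # no reverse record
        return False
    if not hostname.endswith(VERIFIED_SUFFIXES[crawler]):
        return False                             # not the crawler's domain
    try:
        addresses = forward_lookup(hostname)[2]  # IPv4 addresses for that hostname
    except OSError:
        return False
    return ip in addresses                       # must map back to the same IP


# Offline demo with stand-in resolvers (hypothetical hostname and IPs).
def fake_reverse(ip):
    return ("crawl-192-0-2-1.googlebot.com", [], [ip])


def fake_forward(hostname):
    return (hostname, [], ["192.0.2.1"])


print(verify_crawler_ip("192.0.2.1", "googlebot", fake_reverse, fake_forward))    # True
print(verify_crawler_ip("203.0.113.7", "googlebot", fake_reverse, fake_forward))  # False
# Live check against real DNS: verify_crawler_ip(ip_from_your_logs, "googlebot")
```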

You can then stop impostors by adjusting permissions in robots.txt, or quickly block all the spiders and crawlers you don’t need with the Spider Analyser plugin.
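Because robots.txt is only a request, a bot that ignores it has to be blocked on the server. As a minimal sketch outside WordPress (this is not how the Spider Analyser plugin works), a Python WSGI middleware can answer `403 Forbidden` to user agents on your block list. The `BLOCKED_AGENTS` tokens are hypothetical, and since user agents can be spoofed, this works best alongside IP verification.

```python
# Hypothetical block list of lowercase User-Agent substrings you chose to deny.
BLOCKED_AGENTS = ("badbot", "evil-scraper")


def block_crawlers(app, blocked=BLOCKED_AGENTS):
    """WSGI middleware: answer 403 to requests whose User-Agent is on the list."""
    def middleware(environ, start_response):
        agent = environ.get("HTTP_USER_AGENT", "").lower()
        if any(token in agent for token in blocked):
            start_response("403 Forbidden", [("Content-Type", "text/plain")])
            return [b"Forbidden"]
        return app(environ, start_response)  # everyone else reaches the site
    return middleware


def site(environ, start_response):
    """Stand-in for your real WSGI application."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"Hello"]


application = block_crawlers(site)

if __name__ == "__main__":
    from wsgiref.simple_server import make_server
    make_server("", 8000, application).serve_forever()
```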

Summary

Web crawlers are essential to search engines, and understanding them is important for content operators (SEOers) and webmasters.

Making sure your site is crawled correctly by the right crawlers is important to your business’s success. By keeping a list of crawlers, you’ll know which ones to watch for when they appear in your site logs.

When you follow the recommendations of commercial crawlers to improve your site’s content and speed, you make it easier for crawlers to access your site and index the right information for search engines and the consumers searching for it.